23. September 2022 By Marc Mezger

A brief introduction to explainable AI

What is explainable AI?

With tools such as Siri and Alexa, artificial intelligence (AI) is now firmly entrenched in society, and many people use it without even realising it. To assess whether these systems are fair, unbiased and non-discriminatory, we first need to understand them, which is the aim of explainable AI. As a research field, explainable AI (XAI) focuses on the interpretability of machine and deep learning methods. There has been a need for research in this space since the early days of expert systems. Given the complexity of today’s neural networks, which can handle far more complex tasks and abstractions, interpreting models is becoming both more difficult and much more important. Google states the following in its white paper on AI: ‘The primary function of explanations is to facilitate learning better mental models for how events come about’ (https://storage.googleapis.com/cloud-ai-whitepapers/AI%20Explainability%20Whitepaper.pdf). This refers primarily to the fact that in the human learning process, understanding is improved iteratively. The white paper then goes on to say: ‘People then use these mental models for prediction and control. It is natural then to expect people to ask for explanations when faced with abnormal or unexpected events, which essentially violate their existing mental model and are therefore surprising from their point of view.’ This reinforces the idea that a person’s mental model is compared with the real model. If there are any discrepancies between the two, explanations are needed to adjust the mental model or to maintain trust in the process.

In summary, there are five reasons why explanations of machine or deep learning models are necessary:

- Support for human decision-making: This means there are ways to explain the AI’s decisions in a way that is understandable to a human and, above all else, make it possible to understand which combination of input parameters leads to a decision. The larger and more complicated the models are, the more complicated this will be. As an example, a doctor needs to understand why the AI is suggesting a therapy in order to explain a planned course of treatment to a patient.

- Greater transparency: Transparency allows users to build trust in the system. If they are able to understand how the model acts or how it comes to a decision, this creates a shared understanding between AI and humans, which in turn leads people to accept and trust the AI.

- Debugging: This refers to the ability to debug a system, for instance, when an error occurs or the AI model acts unexpectedly. Let us take an example: a loan application from a young professional with a good salary is declined, and it is important for you to understand why this occurred in order to optimise the model.

- Auditing: This relates to the ability to prepare for future regulatory requirements, with the upcoming AI Act being of particular relevance here. You can read more about this in the following blog post. It is important that a model is understandable in order to assess whether it is dependable.

- Verification of generalisability and trust: When it comes to AI models, it is important to know whether they are able to generalise, which is necessary in order for people to trust the system. This is part of an important field of research called trustworthy AI, which has already been explained in greater detail in the blog post mentioned above.

The role of trustworthy AI

While my colleague and adesso expert Lilian Do Khac has already described trustworthy AI in the blog post mentioned above, I will provide a short summary here, since it is very important for understanding explainable AI. Trustworthy AI is a framework that treats the goals of human oversight, technical robustness, privacy and data governance, transparency, fairness, social and/or environmental wellbeing and accountability as essential for building trust in an AI system. Without the necessary trust, AI will not gain acceptance in the long run, which makes its use problematic in practice and is likely to lead to regulatory issues in future.

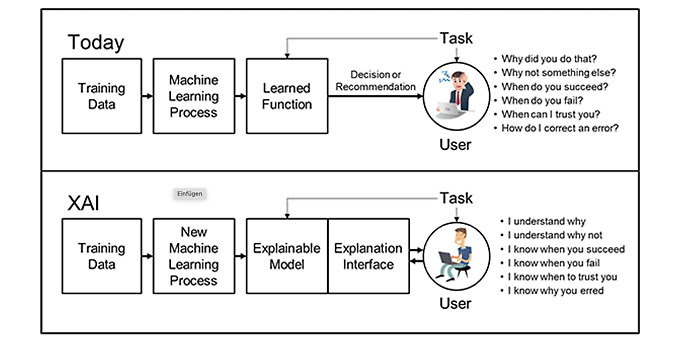

The benefits of explainable AI (source: https://www.darpa.mil/program/explainable-artificial-intelligence)

The diagram above illustrates how the current process is structured without explainable AI. In this process, training data is used to train a machine learning algorithm. The goal is to separate different classes of data or generate numerical predictions. When new data is received, the trained decision logic is applied to it to produce a prediction. The prediction is sent to the user, but without any explanation as to why the decision was made. This raises questions such as: Why was this decision made? How can you trust the model if you do not know how it arrived at its decision?

With explainable AI, in addition to the trained model, there is also an explanatory model with an interface for the user, so that they are able to understand why a model has made a particular decision. This creates a certain level of trust, as the decision-making process is made transparent.

Why is explainable AI needed, and why is it more than just an interesting topic in the research realm?

Generally speaking, there are three standard use cases that can be used to illustrate why explainable AI is essential and should not be ignored:

- The first use case involves the use of AI to detect diseases in a medical context. One example would be an AI that automatically searches computed tomography images for signs of cancer. Since the AI decides which images the doctor should examine more closely, it must be possible to explain how it arrives at its decision, because doctors are unlikely to trust a system that cannot explain its decisions in a way they can understand. A system like this must also be optimised to find as many patients as possible who are suspected of having cancer. At the same time, the AI must flag as few images incorrectly as possible in order not to overload medical staff or cause errors.

- The second use case relates to credit scoring and, more generally, to the topic of liquidity forecasting. Here, an AI predicts the risk that a person with certain characteristics (job, salary, age and so on) will not be able to service a loan of a certain value. Explainability is important in order to avoid violating sections 1 and 2 of the German General Equal Treatment Act (AGG), which deal with the issue of discrimination. It is also important to understand how a decision is made in order to avoid disadvantaging individuals because of a given characteristic, such as advanced age.

- The third use case is predictive policing, which is an AI-driven approach to predicting crime. It can be used by the police to identify crime hotspots and/or to predict the likelihood that certain individuals will commit a crime. Predictive policing software is already being used in Germany. One company that specialises in it is Palantir, which works in partnership with the police in North Rhine-Westphalia and Bavaria, among others. Since the models underlying the predictions often come from the US, where there are major problems with racial profiling and discrimination, it is extremely important to understand how a model arrives at a prediction.

There are many other examples of why explainable AI is important for the use of machine and deep learning algorithms in practice. However, this is beyond the scope of this blog post, so unfortunately I will not be able to provide more in-depth information on the topic at this time.

How explainable AI works

Investigating black box models

A majority of the machine and deep learning methods being used are typically black box models. This means it is unclear what exactly is happening inside the model, making it difficult to understand how the decision was made. Examples of black box models include neural networks (deep learning), support vector machines (SVMs) and ensemble classifiers. On the opposite end of the spectrum, there are white box models, which make it easier to understand decisions because they are based on simpler procedures and/or involve far fewer parameters. Examples of white box models include rule-based classifiers and decision trees.

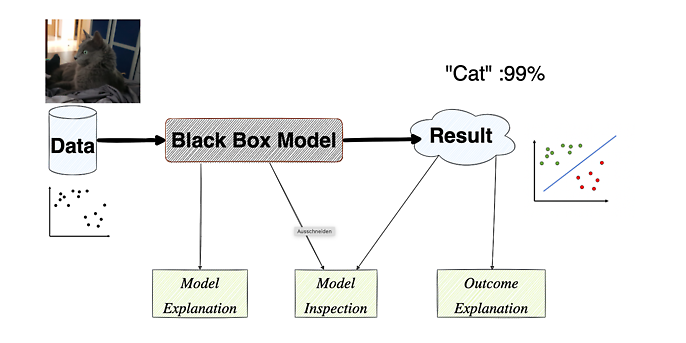

The second diagram illustrates the typical structure of a machine learning application. On the left is the input: images, numerical data or other types of data. The data passes through a model, in this case a black box model, because it is not clear what is happening inside it. A result is then output, for example a 99 per cent likelihood that an image is a photo of a cat, or a predicted boundary for numerical data. There are three ways to apply explainable AI in a construct like this:

Model explanation: This involves explaining the model as a whole, not just an individual decision. One way to do this is to train an interpretable machine learning model on the test inputs together with the outputs the black box produces for them. The interpretable model then imitates the black box, so a white box model can be used to understand and explain the results. Model explanation focuses on the model and the results generated by the black box model.
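The surrogate idea described above can be sketched in a few lines of Python. This is only a minimal illustration under stated assumptions: `black_box` is a hypothetical stand-in for an opaque model, the features (salary, age) and all coefficients are invented, and the white box is deliberately tiny, a decision tree with a single split that is fitted by exhaustive search.

```python
import random

def black_box(salary, age):
    """Hypothetical opaque model: returns 1 (approve) or 0 (decline)."""
    score = 0.00008 * salary - 0.03 * abs(age - 40) - 2.5
    return 1 if score > 0 else 0

def fit_stump(rows, labels):
    """Fit a one-split decision tree to the labels produced by the black box.

    Tries every feature and every observed value as a threshold and keeps
    the split that reproduces the most black box labels (highest fidelity).
    """
    best = None
    for f in range(len(rows[0])):
        for threshold in sorted({r[f] for r in rows}):
            for above in (0, 1):  # label predicted when the value exceeds the threshold
                preds = [above if r[f] > threshold else 1 - above for r in rows]
                fidelity = sum(p == y for p, y in zip(preds, labels)) / len(rows)
                if best is None or fidelity > best["fidelity"]:
                    best = {"feature": f, "threshold": threshold,
                            "above": above, "fidelity": fidelity}
    return best

# Query the black box on sampled inputs, then train the surrogate on its answers.
random.seed(0)
rows = [(random.uniform(20000, 90000), random.uniform(18, 70)) for _ in range(300)]
labels = [black_box(salary, age) for salary, age in rows]
surrogate = fit_stump(rows, labels)

names = ["salary", "age"]
op = ">" if surrogate["above"] == 1 else "<="
print(f"approve if {names[surrogate['feature']]} {op} {surrogate['threshold']:.0f}")
print(f"fidelity to black box: {surrogate['fidelity']:.1%}")
```

The fidelity value indicates how often the simple rule agrees with the black box on the sampled inputs. A surrogate with low fidelity explains little, so in practice a somewhat richer white box model would usually be needed.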

Model inspection: The aim here is to create a representation that explains the properties of the black box model or its predictions. This can be done, for example, by using the output for test instances to generate a graph. For instance, plotting how sensitive the output is to changes in a single variable is one way to represent the model as a graph.
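A sensitivity curve of this kind can be sketched as follows. The code is an illustration only: `black_box`, the feature names and the baseline applicant are hypothetical, and the "graph" is printed as a simple text bar chart rather than plotted.

```python
import math

def black_box(features):
    """Hypothetical opaque model: approval probability from a feature dict."""
    z = 0.00008 * features["salary"] - 0.03 * abs(features["age"] - 40) - 3.0
    return 1.0 / (1.0 + math.exp(-z))

def sensitivity_curve(model, baseline, feature, values):
    """Vary one feature over `values` while keeping all others at the baseline."""
    curve = []
    for value in values:
        probe = dict(baseline)   # copy, so the baseline itself is not modified
        probe[feature] = value
        curve.append((value, model(probe)))
    return curve

baseline = {"salary": 45000, "age": 35}
salary_curve = sensitivity_curve(black_box, baseline, "salary", range(20000, 90001, 10000))
age_curve = sensitivity_curve(black_box, baseline, "age", range(20, 71, 10))

# Text rendering of the salary curve: one bar per probed value.
for value, prob in salary_curve:
    print(f"salary={value:>6}  {'#' * round(prob * 40):<40}  {prob:.2f}")
```

Only the inputs and outputs of the model are used, so the same function works for any model that can be called on a feature dictionary.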

Outcome explanation: This involves providing an explanation for a single input. In other words, it is not about understanding the model as a whole. It simply provides a rationale for a decision. For example, you could employ a decision tree whose relevant path is traced and used as an explanation.
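Tracing the path through a decision tree can be sketched like this. The tree, its thresholds and the applicant are all invented for illustration; a real tree would be learned from data.

```python
# Hypothetical, hand-written decision tree for a loan decision.
TREE = {
    "feature": "salary", "threshold": 40000,
    "low": {"leaf": "decline"},
    "high": {
        "feature": "age", "threshold": 65,
        "low": {"leaf": "approve"},
        "high": {"leaf": "decline"},
    },
}

def explain(tree, sample):
    """Walk from the root to a leaf and record every test that was applied."""
    path = []
    node = tree
    while "leaf" not in node:
        name, threshold = node["feature"], node["threshold"]
        if sample[name] <= threshold:
            path.append(f"{name} = {sample[name]} <= {threshold}")
            node = node["low"]
        else:
            path.append(f"{name} = {sample[name]} > {threshold}")
            node = node["high"]
    return node["leaf"], path

decision, reasons = explain(TREE, {"salary": 52000, "age": 29})
print(decision, "because", " and ".join(reasons))
```

The recorded path is the explanation for this one input. It also makes questionable rules visible, such as the age threshold in this invented tree.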

Generally speaking, explainable AI is relatively easy to implement for classical machine learning methods because a comparatively small number of components are involved in making a decision. In the case of deep learning and the neural networks it uses, this is much more difficult, since the number of components can easily run into the thousands or millions. GPT-3, one of the most popular networks for natural language processing, has 175 billion parameters, which makes explainable AI significantly more difficult, though not impossible.

Examples of explainable AI

I would now like to provide a few examples of what explainable AI might look like and how it can be used to explain or understand decisions. While this is not a detailed, standardised procedure that we use with our applications, it should provide a general overview of the simple options that are available. I will be providing a more detailed description of these procedures along with examples in a separate blog article.

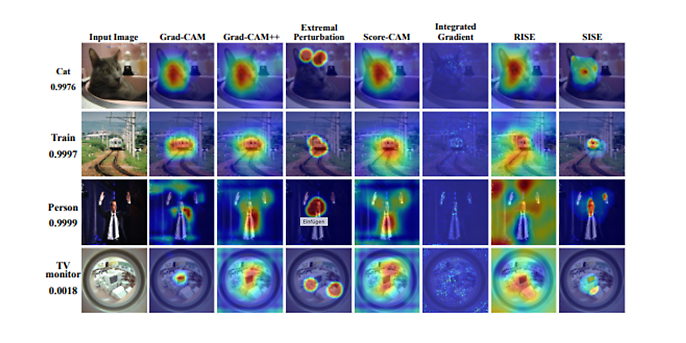

Heat map methods for convolutional neural networks (source: https://arxiv.org/pdf/2010.00672.pdf)

In the diagram above, the input image that is fed into a convolutional neural network (an image recognition network) can be seen on the left, with heat maps shown on the right. These show the sections of the image that are most important for the network’s decisions. The redder a section of the image is, the more important it is. This example clearly shows that the network often focuses on the face of a cat or person.
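One simple way to obtain a heat map like this is occlusion sensitivity: hide one part of the image at a time and measure how much the model's score drops. The sketch below uses that technique, which is not necessarily one of the methods shown in the figure. The `black_box` function is a hypothetical stand-in for a trained network, and the "image" is a tiny grid of brightness values.

```python
def black_box(image):
    """Hypothetical classifier score: reacts only to brightness in the image centre."""
    centre = [image[r][c] for r in range(2, 4) for c in range(2, 4)]
    return sum(centre) / len(centre)

def occlusion_heatmap(model, image, fill=0.0):
    """Score drop when each pixel is replaced by `fill`; larger means more important."""
    base = model(image)
    heat = []
    for r, row in enumerate(image):
        heat_row = []
        for c in range(len(row)):
            occluded = [list(line) for line in image]  # copy, original stays intact
            occluded[r][c] = fill
            heat_row.append(base - model(occluded))
        heat.append(heat_row)
    return heat

image = [[1.0] * 6 for _ in range(6)]  # 6x6 image, uniformly bright
heat = occlusion_heatmap(black_box, image)
for row in heat:
    print(" ".join(f"{value:.2f}" for value in row))
```

Pixels with a large score drop would be coloured red in a heat map. For real images, larger patches are usually occluded instead of single pixels to keep the number of model calls manageable.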

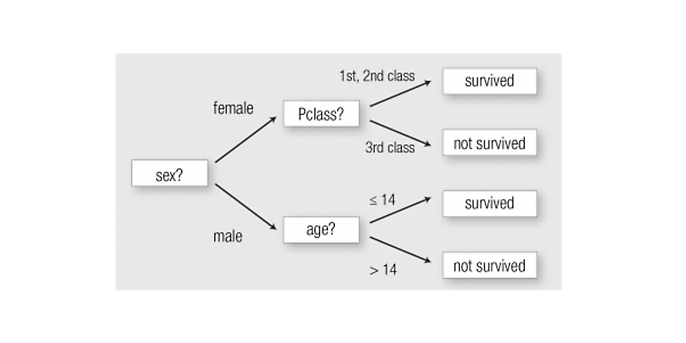

The diagram below shows the decision depicted in a decision tree. The explanation for the decision on the predicted survival of passengers travelling on the Titanic is shown as an example. Decisions can be made based on different variables that are queried.

Erklärung für die Entscheidung für die Überlebensvorhersage bei Titanic-Passagieren (Quelle: https://dl.acm.org/doi/fullHtml/10.1145/3236009)

With these two examples, I would like to conclude my short excursion into the world of explainable AI. In a future blog post, I will be presenting other techniques, with a primary focus on computer vision and natural language processing. So stay tuned!
